Amy Pavel

Assistant Professor

University of Texas at Austin

Computer Science

Email apavel@cs.utexas.edu

Office GDC 3.704

Twitter @amypavel

Publications Google Scholar

Curriculum Vitae PDF

People

Ph.D. Students: Mina Huh, Karim Benharrak, Yi-Hao Peng (co-advised with Jeffrey P. Bigham)

Master's Students, Undergraduates, and RAs: Ananya G M, Aadit Barua, Akhil Iyer, Yuning Zhang, Doeun Lee, Tess Van Daele, Pranav Venkatesh

Recent Alumni: Daniel Killough, Jalyn Derry, Aochen Jiao, Soumili Kole, Chitrank Gupta

I am an Assistant Professor in the Department of Computer Science at The University of Texas at Austin. I am recruiting Ph.D. students for Fall 2024. If you are a prospective graduate student or postdoc, please feel free to get in touch!

Previously, I was a postdoctoral fellow at Carnegie Mellon University (supervised by Jeff Bigham) and a Research Scientist at Apple. I received my PhD from the Department of Electrical Engineering and Computer Science at UC Berkeley, advised by Professors Björn Hartmann at UC Berkeley and Maneesh Agrawala at Stanford. My PhD work was supported by an NDSEG Fellowship, a departmental Excellence Award, and a SanDisk Fellowship.

I regularly teach a Computer Science class that covers the design and development of user interfaces (Introduction to Human-Computer Interaction). Prior versions of this class include CS160 at UC Berkeley in Summer 2018 and CS378 at UT Austin in Spring 2022.

Research Summary

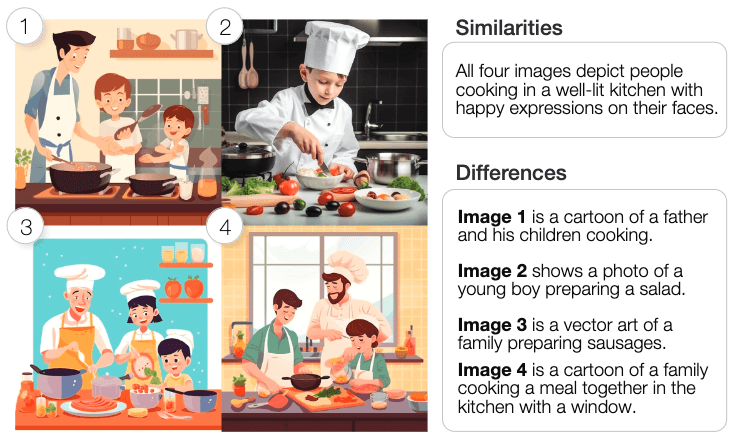

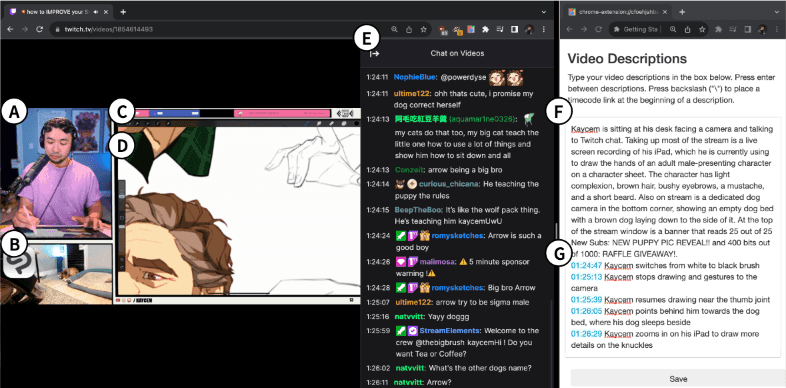

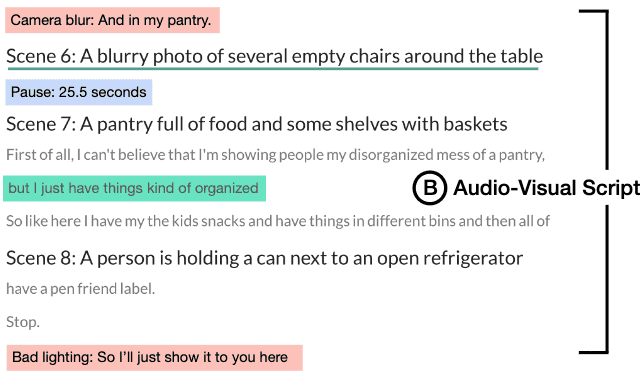

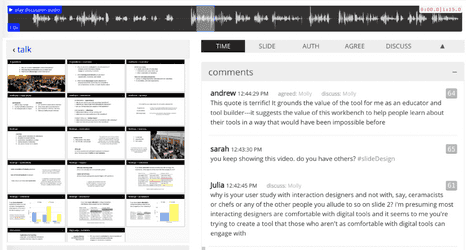

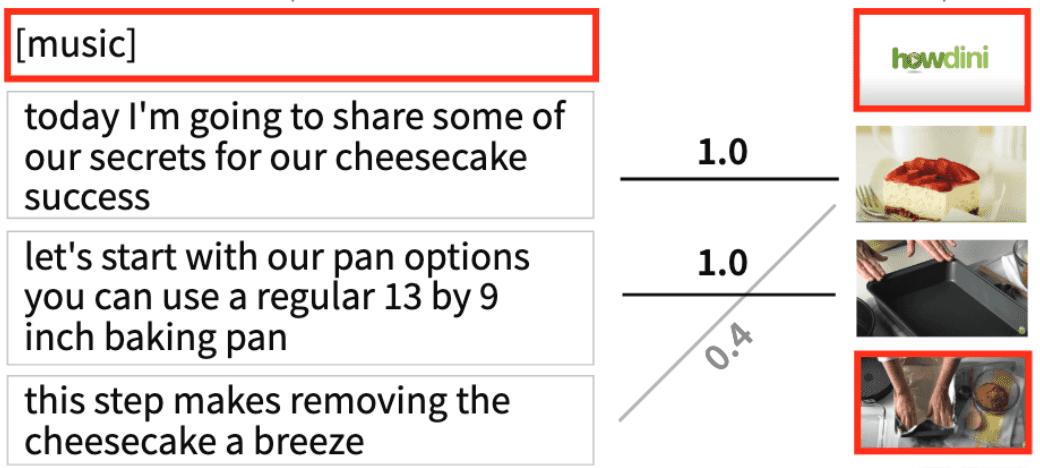

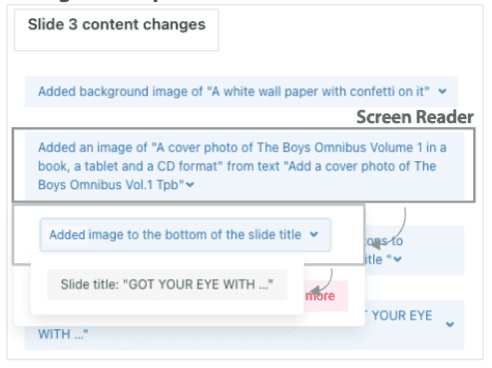

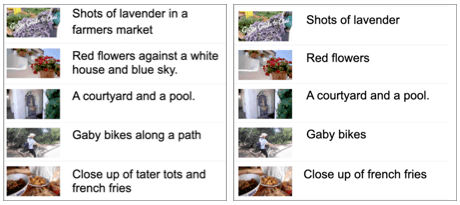

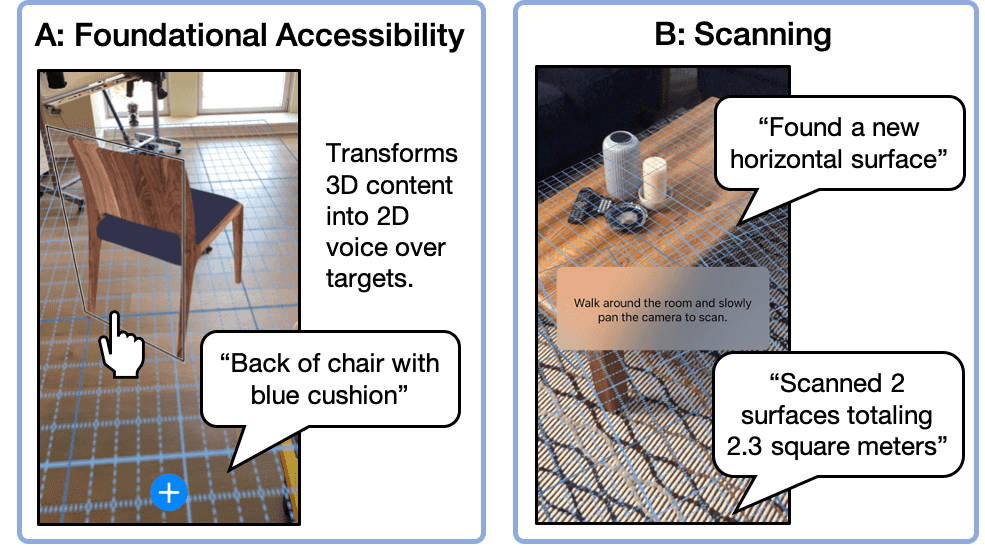

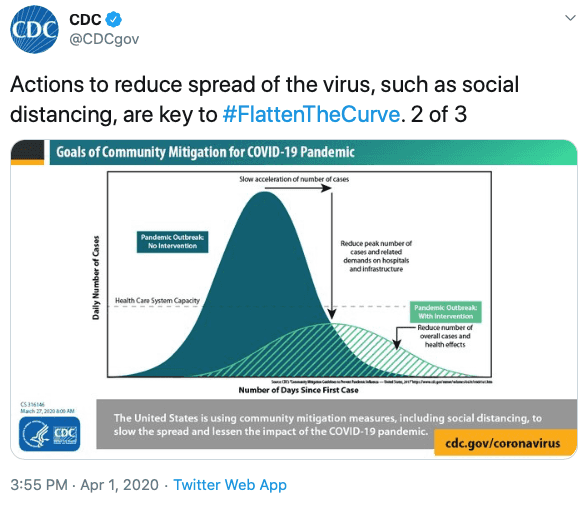

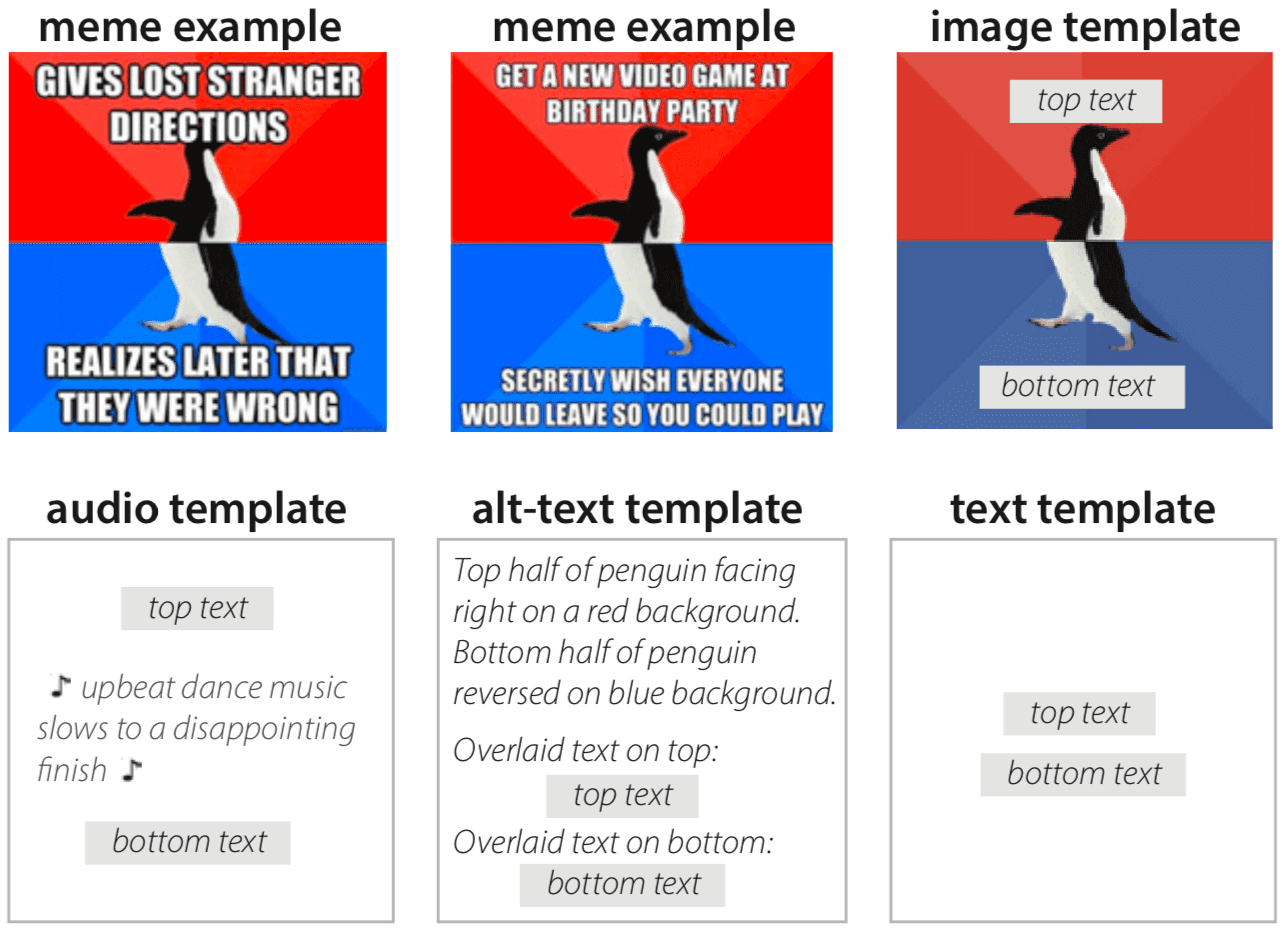

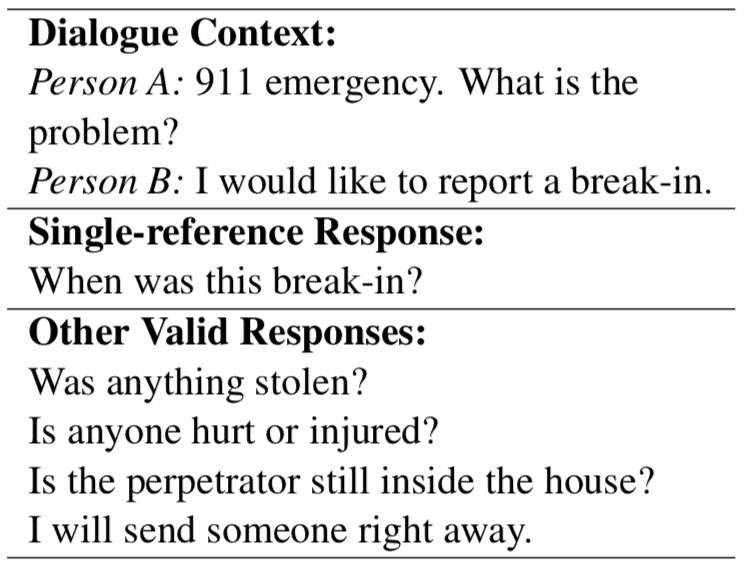

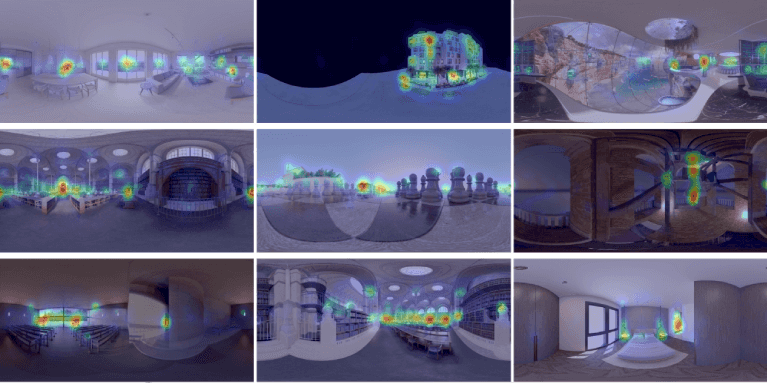

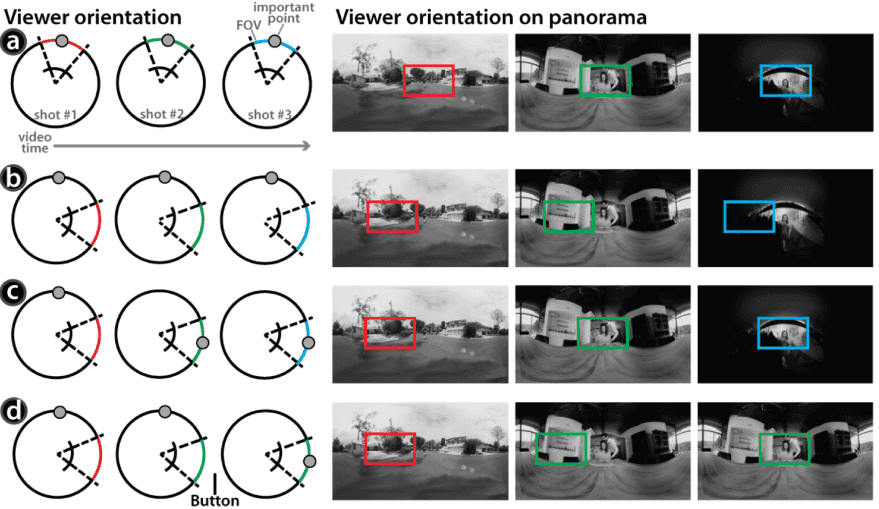

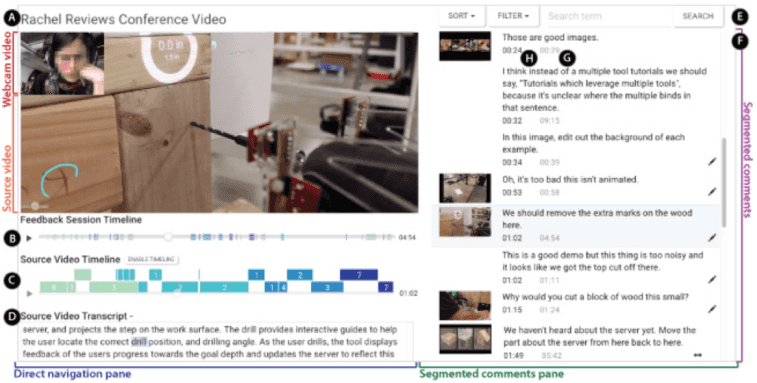

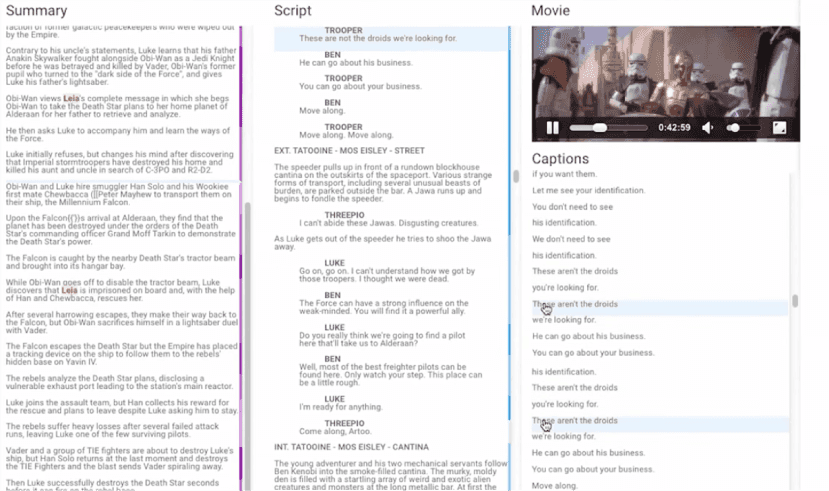

As a systems researcher in Human-Computer Interaction, I embed machine learning technologies (e.g., Natural Language Processing) into new human interactions, which I then deploy and evaluate. Using my systems, remote content creators collaborate more effectively, video authors efficiently create accessible descriptions for blind users, and instructors help students learn and retain key points. To inform future systems that capture what is important to domain experts and people with disabilities, I also conduct and collaborate on in-depth qualitative studies (e.g., AAC communication, memes) and quantitative studies (e.g., 360° video, VR saliency). My long-term research goal is to make communication more effective and accessible.

Research Papers

Tess Van Daele, Akhil Iyer, Yuning Zhang, Jalyn Derry, Mina Huh, Amy Pavel

Conditionally Accepted to CHI 2024

PDF coming soon

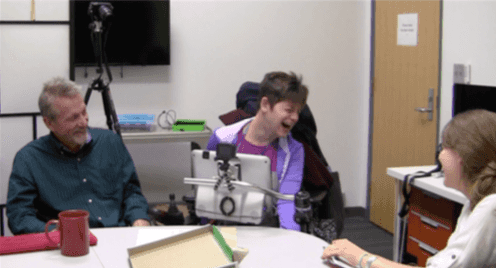

Stephanie Valencia, Jessica Huynh, Emma Y Jiang, Yufei Wu, Teresa Wan, Zixuan Zheng, Henny Admoni, Jeffrey P. Bigham, Amy Pavel

Conditionally Accepted to CHI 2024

PDF coming soon

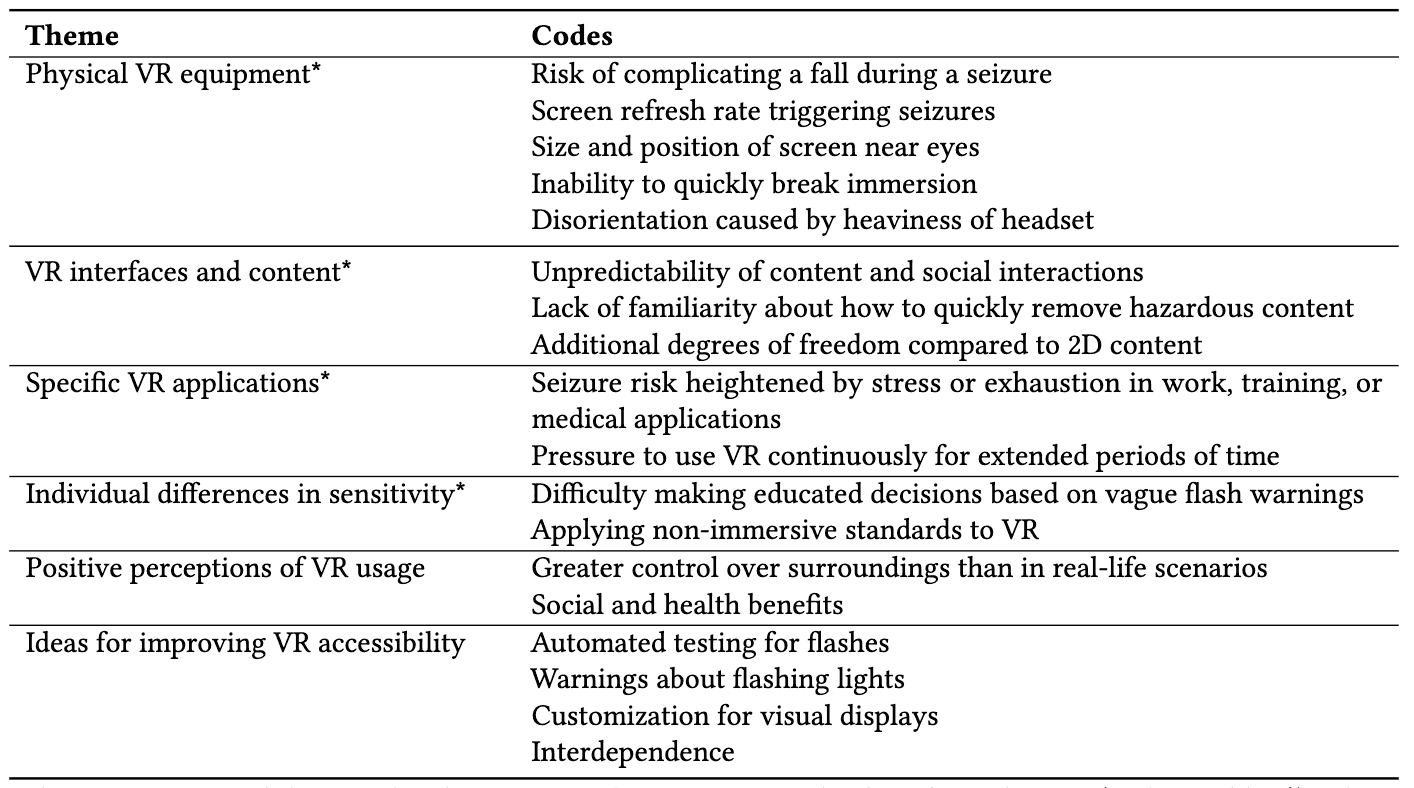

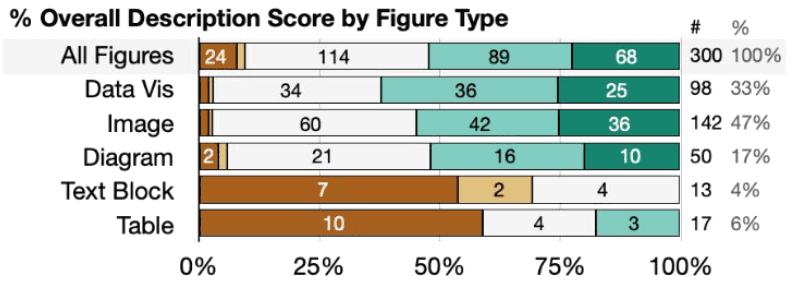

Laura South, Caglar Yildirim, Amy Pavel, Michelle A. Borkin

Conditionally Accepted to CHI 2024

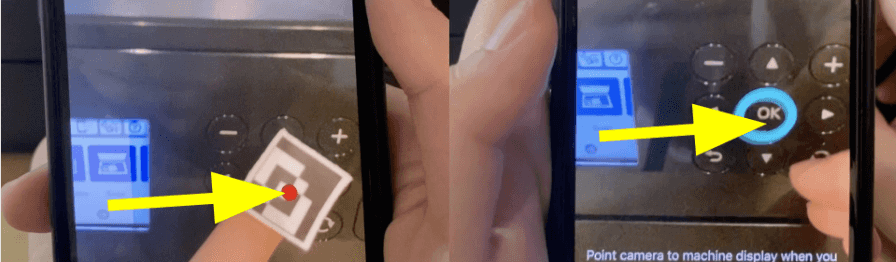

Xingyu Liu, Ruolin Wang, Dingzeyu Li, Xiang "Anthony" Chen, Amy Pavel

UIST 2022

PDF | Project Page | Video

Yasmine Kotturi, Herman T Johnson, Michael Skirpan, Sarah E Fox, Jeffrey P. Bigham, Amy Pavel

CHI 2022

Candace Williams, Lilian de Greef, Ed Harris III, Amy Pavel, Cynthia L. Bennett

W4A 2022

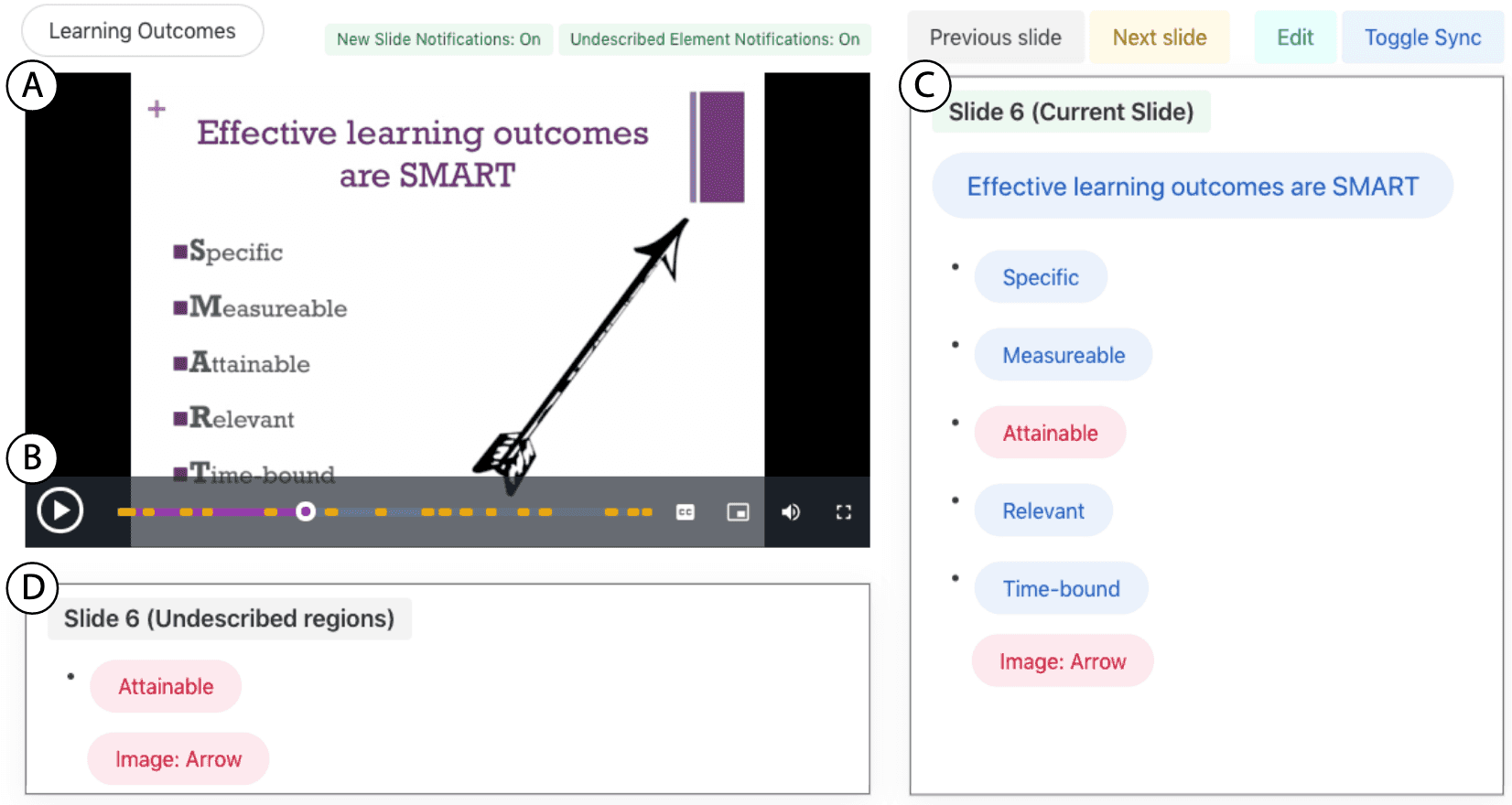

Yi-Hao Peng, Jeffrey P. Bigham, Amy Pavel

ASSETS 2021

Yi-Hao Peng, JiWoong Jang, Jeffrey P. Bigham, Amy Pavel

CHI 2021

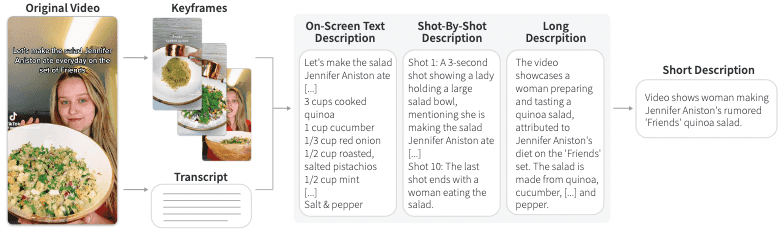

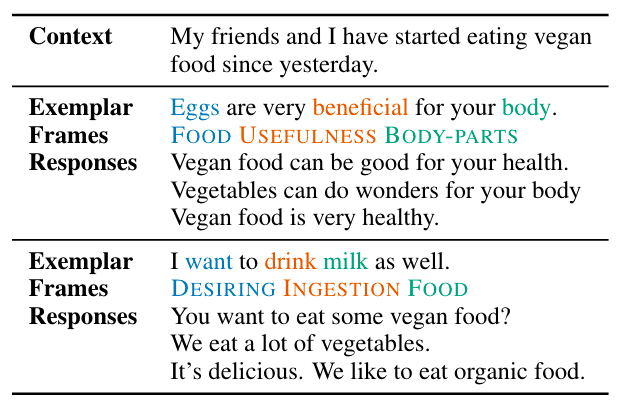

Amy Pavel, Gabriel Reyes, Jeffrey P. Bigham

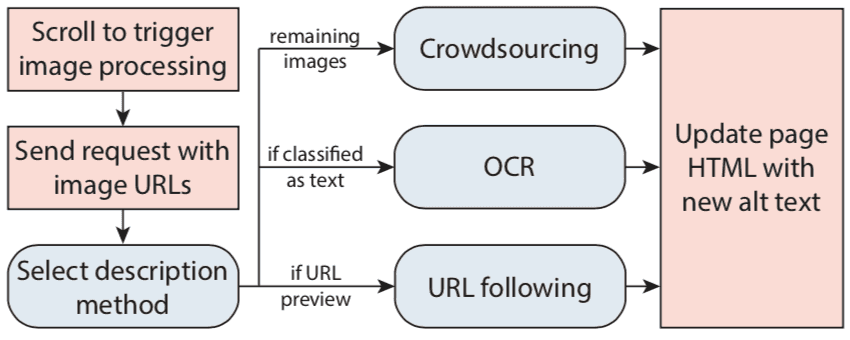

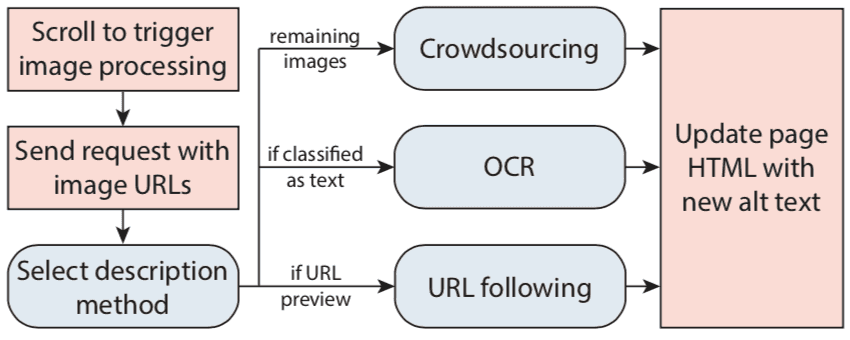

UIST 2020

Jaylin Herskovitz, Jason Wu, Samuel White, Amy Pavel, Gabriel Reyes, Anhong Guo, Jeffrey P. Bigham

ASSETS 2020

Cole Gleason, Amy Pavel, Himalini Gururaj, Kris M. Kitani, Jeffrey P. Bigham

ASSETS 2020

Cole Gleason, Amy Pavel, Emma McCamey, Christina Low, Patrick Carrington, Kris M. Kitani, Jeffrey P. Bigham

CHI 2020

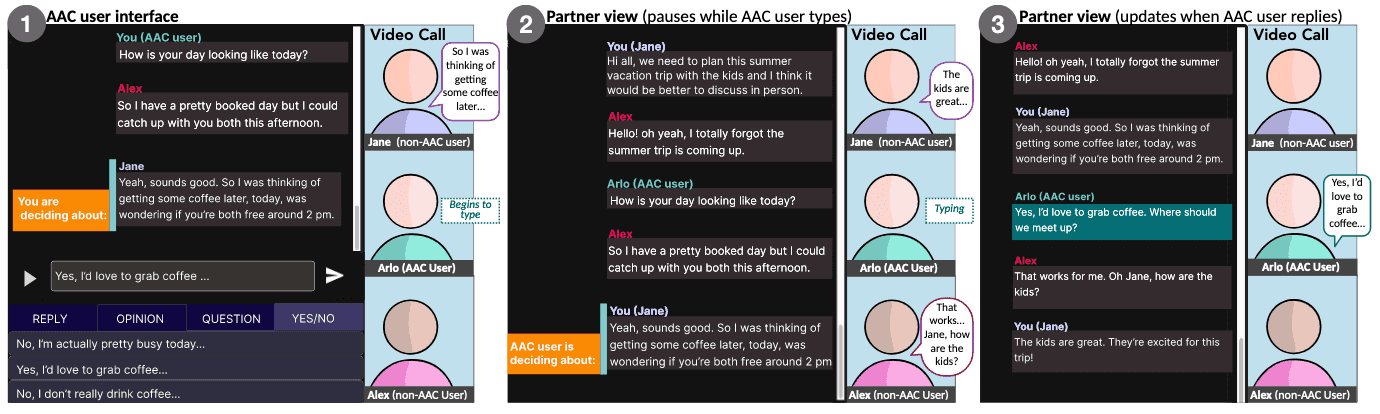

Stephanie Valencia, Amy Pavel, Jared Santa Maria, Seunga (Gloria) Yu, Jeffrey P. Bigham, Henny Admoni

CHI 2020

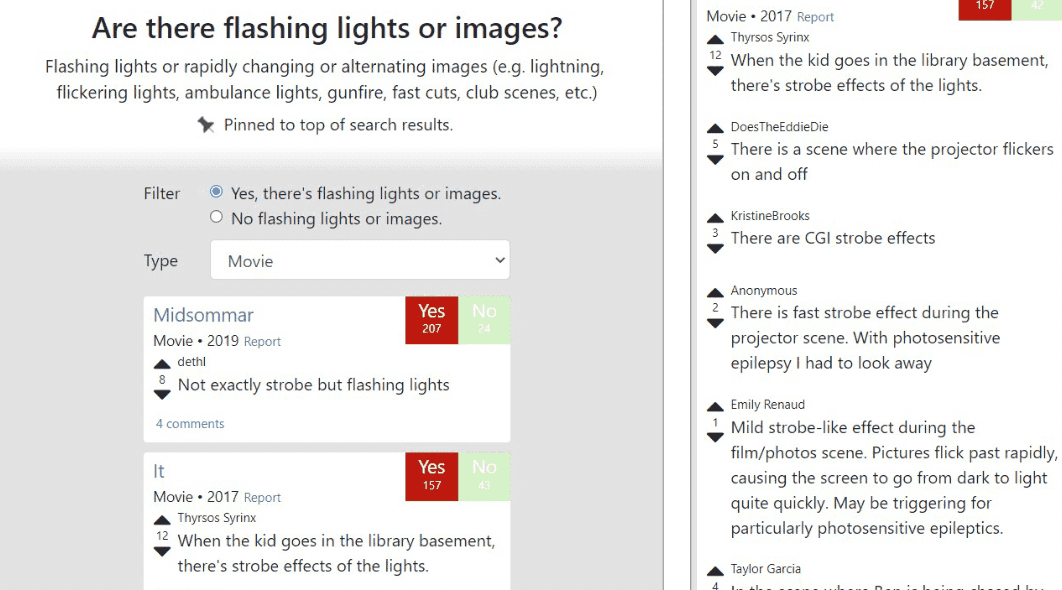

Cole Gleason, Amy Pavel, Xingyu Liu, Patrick Carrington, Lydia Chilton, Jeffrey P. Bigham

ASSETS 2019

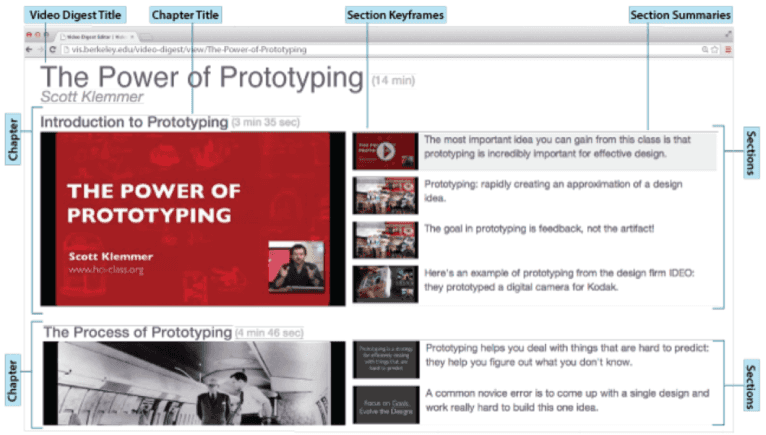

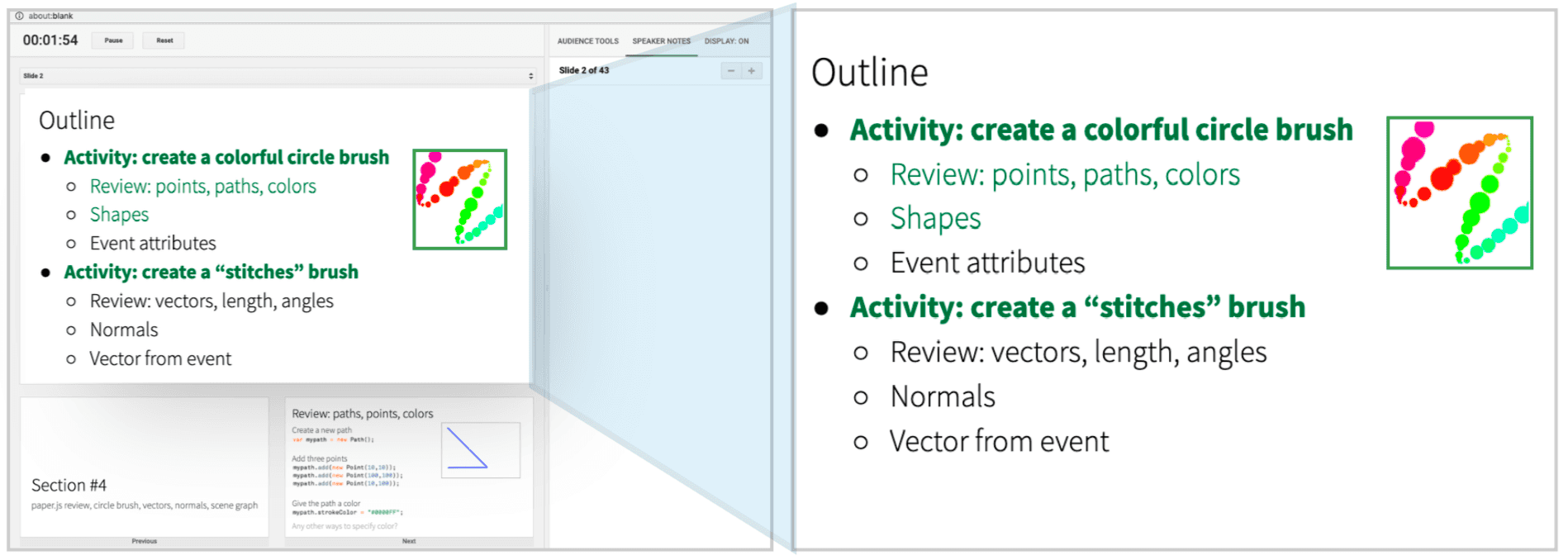

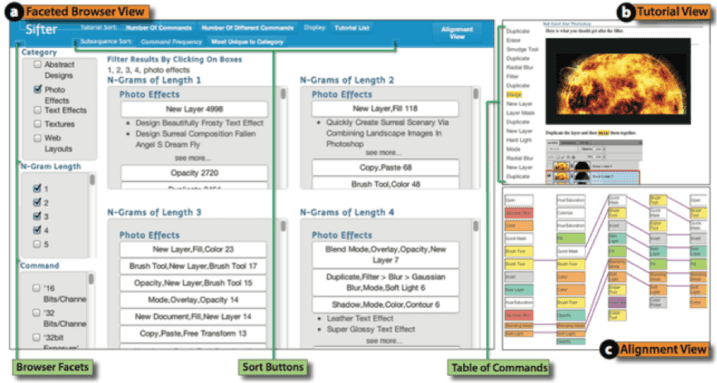

Amy Pavel, Dan B Goldman, Björn Hartmann, Maneesh Agrawala

UIST 2016

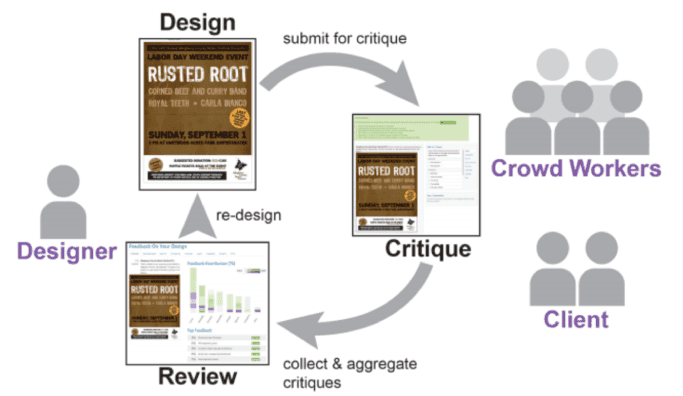

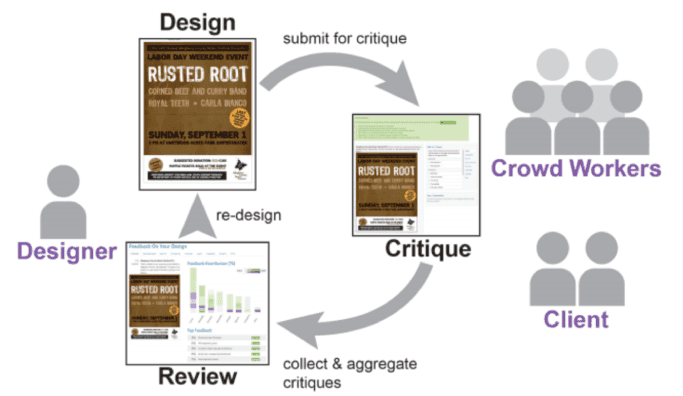

Kurt Luther, Jari-lee Tolentino, Wei Wu, Amy Pavel, Brian P Bailey, Maneesh Agrawala, Björn Hartmann, Steven Dow

CSCW 2015

Thesis and Technical Reports

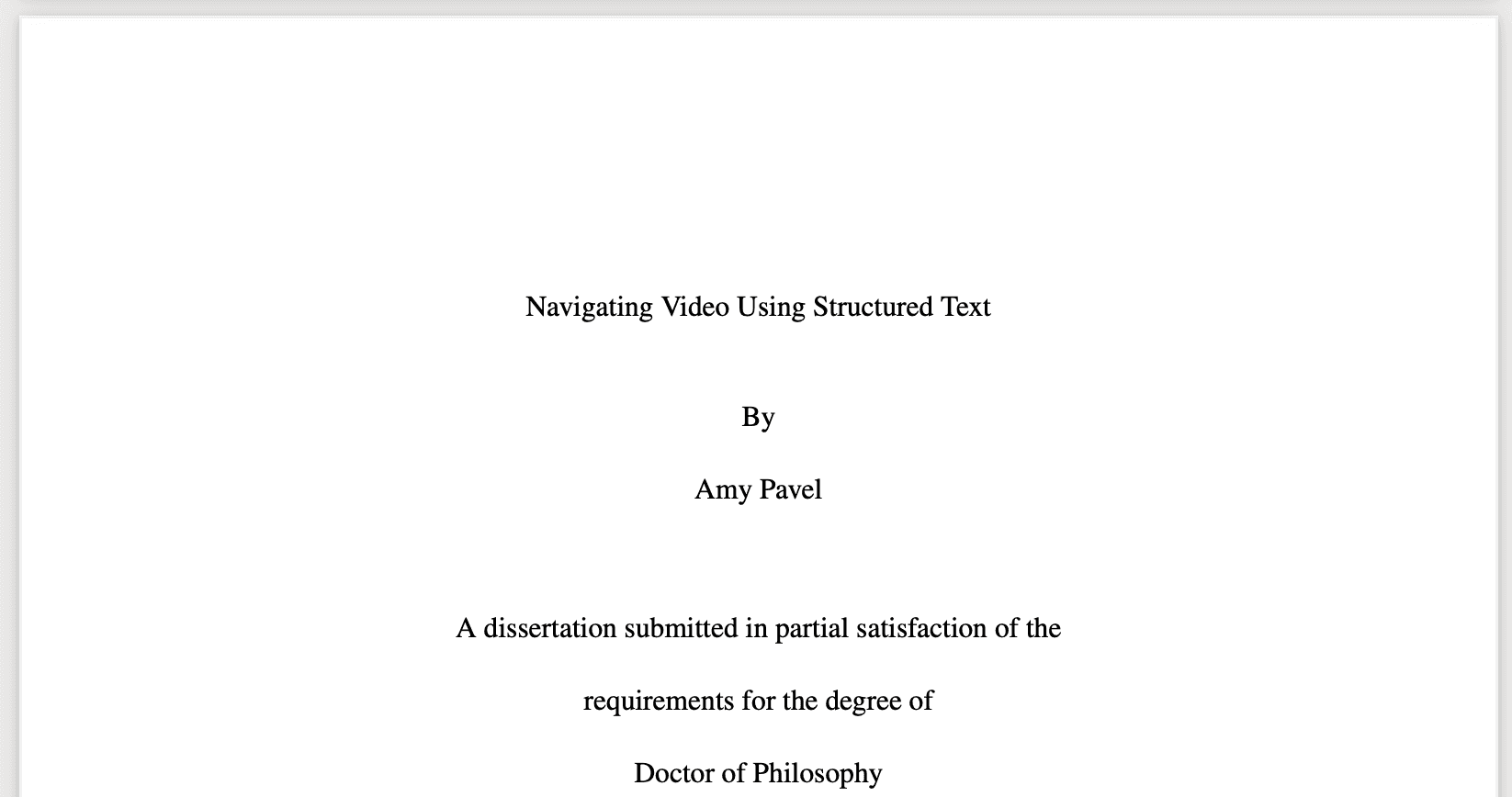

Amy Pavel

PhD in Computer Science, University of California, Berkeley

Advisors: Björn Hartmann and Maneesh Agrawala

Additional committee members: Eric Paulos, Abigail De Kosnik

Amy Pavel, Floraine Berthouzoz, Björn Hartmann, Maneesh Agrawala

UC Berkeley Technical Report, EECS-2013-167

Posters and Workshops

Laura South, Caglar Yildirim, Amy Pavel, Michelle A. Borkin

CHI 2023 (Extended Abstract)

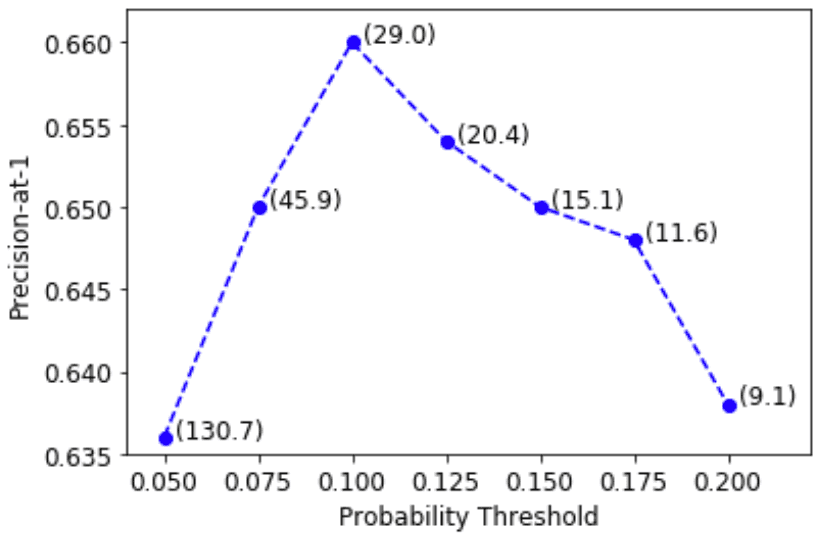

Kundan Krishna, Amy Pavel, Benjamin Schloss, Jeffrey P. Bigham, Zachary Lipton

W3PHIAI 2020 Workshop Paper

Kurt Luther, Amy Pavel, Wei Wu, Jari-lee Tolentino, Maneesh Agrawala, Björn Hartmann, Steven Dow

CSCW 2014

Work

Assistant Professor — University of Texas at Austin

Department of Computer Science

January 2022 —

Research Scientist (50% time) — Apple Inc

AI/ML

Machine Intelligence Accessibility Group

July 2019 — January 2022

Postdoctoral Fellow (50% time) — Carnegie Mellon University

HCII

Supervised by Professor Jeffrey P. Bigham

January 2019 — October 2021

Graduate Researcher — UC Berkeley

Visual Computing Lab

Advised by Professors Björn Hartmann and Maneesh Agrawala

September 2013 — January 2019

Research Intern — Adobe

Creative Technologies Lab

Advised by Principal Scientist Dan Goldman

Summer 2014, Summer 2015

Undergraduate Researcher — UC Berkeley

BiD Lab, Visual Computing Lab

Advised by Professors Björn Hartmann and Maneesh Agrawala

June 2011 — September 2013

Teaching

Instructor — UT Austin

CS 395T: Human-Computer Interaction Research

Fall 2023

Instructor — UT Austin

CS 378: Introduction to Human-Computer Interaction

Spring 2023

Graduate student instructor — UC Berkeley

CS 160: User Interface Design and Development

Summer 2017

Teacher — UC Berkeley

Berkeley Engineers and Mentors

2009 - 2010